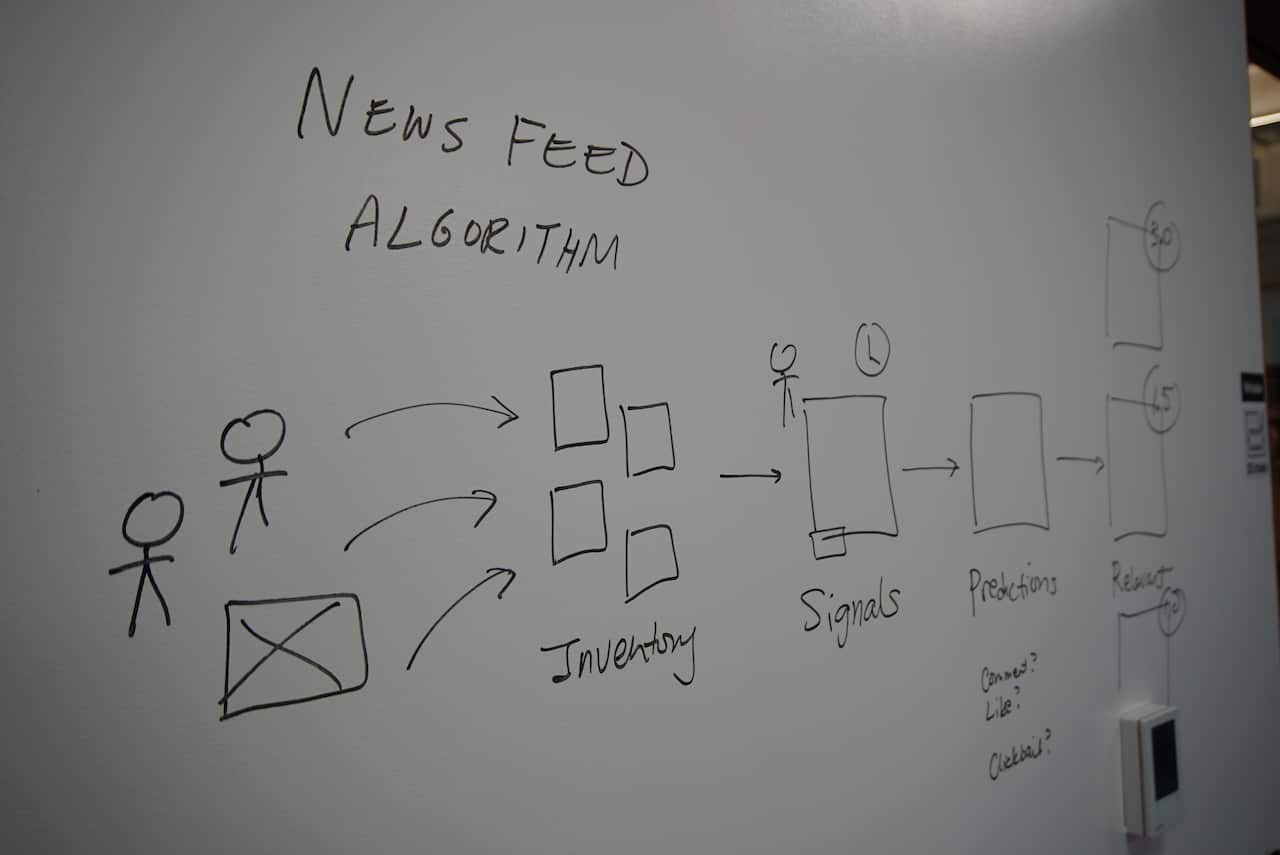

On a whiteboard inside Facebook’s sprawling Menlo Park campus in California, Sara Su is drawing a diagram to explain exactly how its news feed algorithm decides what you see.

Until recently, laying all this out for a journalist would have been unthinkable.

The algorithm looks for what users engage with (“signals”, as Ms Su, the company’s news feed product manager, calls them), such as which posts you and people in your friend circle ‘like’, share or comment on.

“We can use these signals to make a series of predictions,” she says.

“From all of these signals and predictions, we come up with a number that predicts how relevant this story is to you.”

How Facebook's news feed works. Source: SBS News

She’s blunt about why Facebook is baring all: “Trust can be lost in an instant, but it takes time to rebuild.”

The revelation earlier this year that political consultancy Cambridge Analytica had improperly harvested information from Facebook users turned smouldering resentment over fake news, election manipulation and the ethics of Facebook’s business model into a public relations bonfire for the social media giant.

The scandals prompted worldwide anger, saw younger users turn away, and caused Facebook’s stock value to plummet by nearly 20 per cent.

That fall reflected doubt: would people still willingly share their lives on Facebook?

Facebook HQ in Menlo Park, California. Source: SBS News

“Trust is the real currency these platforms depend on,” says analyst Paul Verna from the firm eMarketer, which monitors Facebook’s fortunes.

“Because without that, you can’t build the social network, and you can’t attract advertisers.”


Facebook’s response to the crisis has been a concerted effort to explain itself better, opening up to the world about how its systems work in a bid to regain users’ trust.

That effort has never been more important, with the United States’ first midterm elections under President Donald Trump approaching.

'Meaningful social interaction'

Sara Su explains a change Facebook CEO Mark Zuckerberg mandated even before the Cambridge Analytica scandal: re-weighting the news feed algorithm away from attention-grabbing content and towards what Zuckerberg termed ‘meaningful social interaction’.

“[In the algorithm] we’re weighting stories that are more likely to spark conversations,” Ms Su says.

“That will result in them getting a higher relevance score, and they’ll start to show up earlier in people’s news feeds.”
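To make the idea concrete, here is a minimal Python sketch of the kind of scoring Ms Su describes. It assumes the “number” is a simple weighted sum of predicted reactions; the story names, probabilities and weights are invented for illustration and are not Facebook’s actual model. Raising the weights on comments and shares, a stand-in for favouring stories “more likely to spark conversations”, is enough to change which story appears first.

```python
from dataclasses import dataclass


@dataclass
class Story:
    name: str
    # Hypothetical predicted probabilities that the viewer will react this way.
    p_like: float
    p_comment: float
    p_share: float


def relevance_score(story: Story, weights: dict[str, float]) -> float:
    """Combine the predictions into one number (assumed: a weighted sum)."""
    return (weights["like"] * story.p_like
            + weights["comment"] * story.p_comment
            + weights["share"] * story.p_share)


def rank_feed(stories: list[Story], weights: dict[str, float]) -> list[str]:
    """Order stories from highest to lowest score: higher scores show up earlier."""
    ranked = sorted(stories, key=lambda s: relevance_score(s, weights), reverse=True)
    return [s.name for s in ranked]


stories = [
    Story("viral video from a page", p_like=0.30, p_comment=0.02, p_share=0.05),
    Story("family photo from a friend", p_like=0.20, p_comment=0.10, p_share=0.01),
]

# Illustrative weights only: the second set boosts conversation-style signals.
engagement_weights = {"like": 1.0, "comment": 1.0, "share": 1.0}
conversation_weights = {"like": 1.0, "comment": 4.0, "share": 2.0}

print(rank_feed(stories, engagement_weights))
# ['viral video from a page', 'family photo from a friend']
print(rank_feed(stories, conversation_weights))
# ['family photo from a friend', 'viral video from a page']
```

With equal weights the video scores 0.37 against the photo's 0.31 and ranks first; with the conversation-weighted set the photo scores 0.62 against 0.48 and overtakes it. Facebook’s real system draws on far more signals, so this sketch only shows the mechanism of re-weighting, not the company’s implementation.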

Facebook CEO Mark Zuckerberg. Source: AAP

The openness is part of Facebook’s PR offensive, aimed at combatting what Stanford University social media professor Jeff Hancock terms “folk theories” – users’ decisions based on often incorrect understandings of how things work.

“If people have one idea of how the system works, but in fact the system works a totally different way, then people are going to stop using that product, like the Facebook news feed,” Professor Hancock tells SBS News.

But the last fortnight has brought sobering news for Facebook on how long rebuilding its reputation may take.

A new survey from the Pew Research Center shows 53 per cent of adult Facebook users in the US still don’t understand why certain posts are included in their news feed. That confusion has often fed criticism that Facebook has been too slow to remove false or hateful material.


It has also allowed conspiracy theories to flourish, such as one viral hoax claiming that the algorithm change limits users’ feeds to posts from just 25 friends.

The Pew survey also finds nearly three quarters (74 per cent) of American adult users say that in the last 12 months they have adjusted their privacy settings, taken a break of several weeks or more from Facebook, or deleted the app from their phone.

Facebook has acknowledged its failure to spot and stop Russian-coordinated meddling in the 2016 presidential election, and part of its challenge has been accepting the enormous responsibility that comes with being a platform for 2.2 billion active users: deciding what people can and cannot say.

In keeping with its transparency push, the company has published the guidelines its reviewers follow, so people know how Facebook assesses questionable material.

Separately, it points to major spending on teams of content reviewers, and machine learning tools capable of flagging and removing suspect material.

Those investments are paying off, says Monika Bickert, Facebook’s Vice President in charge of content rules. She told SBS News the company removed a staggering 583 million fake accounts in the first quarter of 2018 alone.

Source: SBS News

Ms Bickert says Facebook sees misinformation as a ‘global issue’, acknowledging the platform’s use by groups inciting violence against Muslims in Sri Lanka and Rohingya Muslims in Myanmar.

Criticised for being unresponsive to complaints from minority groups, Facebook has now hired more content moderators who can understand local languages, and take down hateful material.

Midterm challenge looming

As America’s midterm elections approach, senior figures associated with Facebook are warning that it faces increasingly sophisticated attempts to sow misinformation and division.

“The techniques that were used in election interference in 2016 are probably like only a percentage of what is going to come,” Mike Krieger, co-founder of Facebook-owned Instagram, said recently.

“But at least what I’m seeing, internal at Instagram, internal at Facebook, is that people are thinking about this a whole lot more than they were in 2016.”

Indeed, Facebook has made several recent announcements heralding the detection and removal of “inauthentic” Russian- and Iranian-linked pages.

“We’re definitely getting better at finding this sort of activity and stopping it,” Ms Bickert says.

But pressed on whether users are responding to the company’s transparency push, she acknowledges public opinion remains sceptical.

“In terms of communicating with people, I think there is more we need to do,” she says.

“We’re trying every day to engage, we’re trying to get the message out there that we’re taking this seriously.”

Facebook’s harshest critics believe the company is reaping what it has sown, seeing its present problems as the consequence of pursuing growth at all costs.

But within the company there remains a firm belief that by acknowledging wrongdoing and working to atone for its mistakes, Facebook can recover.

“It’s always hard to read negative press about the work that you’re doing,” Ms Su says, in front of her whiteboard.

“But I think that really underlines for all of us on this team how important this work is, and that keeps us going.

“We’re in it for the long haul.”